Heaven's Few Retrospective 1 - Ideation to Prototyping

Preface

'Heaven's Few' is a game for VR that I've been slowly iterating on since 2019. The game is still in the pre-production stage, with the goal of delivering a playable vertical slice running on Meta Quest. Since the history of this idea is spread out over time and scattered across old notes, screenshots, and Discord messages, this retrospective is me collecting everything into one place and looking back at all the exploration and learning I've done so far. I don't really go into the details of the game itself here; this is more about how I grew the idea over time and the train of thought I've had about this game from 2019 all the way to today.

Introduction

Sometime in 2019, I sketched out a concept for a VR game where a player would move the entire room around them. The inspiration came when I was using Oculus Medium to smooth out some gaps in a RealityCapture mesh. The mesh was a reconstruction of one section of a mall's interior near my high school, so I found myself wandering this virtual mall, spinning it, zooming in and out, and "moving" through it by dragging the mesh towards or away from me. I started to notice that this kind of interaction was really fun and encouraged meaningful exploration. Maybe more importantly, it was a novel and unexplored interaction that only felt natural in virtual reality and nowhere else. So, could this be a good game?

The clip below shows photogrammetry expert Azad Balabanian moving a room around in Oculus Medium. It looks like he's moving around the room, but he's actually moving the room around him (link).

The first note I made explaining this idea.

2020: First prototype, and inspirations from Half-Life: Alyx

This was still early in my game development learning journey and I still didn't really know what I was doing, but I started to see if I could at least develop a working prototype demonstrating the mechanic I had in mind. I remember a lot of struggle just getting the headset and controllers to work with the prototype, first setting up the stuff that would make a WMR headset work in Unity (was using the Lenovo Explorer at this time), then setting up the input to go through Steam Input so that I could use SteamVR features like button remapping. The prototype that ultimately came out of this had an incomplete version of the game mechanic where the player could not rotate the room, but I was able to put the player mid-air in a room with physics objects, and they could drag the room around by holding grip/trigger and moving the controller around, and that would make the physics objects bounce around accordingly. Unfortunately I couldn't get any input to work when I booted it up recently through Virtual Desktop to my Quest 2, so I don't have good footage to show here. In February 2020 I uploaded this build of the prototype (link) to Itch.

Half-Life: Alyx spoiler below, skip to the next heading if needed.

Shortly after this in March was when Half-Life: Alyx released. Mild gameplay spoilers, but if you've played the game you know that some later levels have some trippy layouts that play with orientation and gravity in weird ways, so of course I wanted to dig deeper into this as reference for my own project. A half-working version of Hammer 2 (Source 2's map editor) was leaked somewhere on Reddit so I spent some time exploring that, and shortly afterward the official HL:A Workshop Tools dropped. I'll just quote a summary from my "two years of CS" post:

Following the release of the Half-Life: Alyx Workshop Tools, I wanted to see how the later anti-gravity levels worked and see if I could make my own. I had thought that maybe the gravity of parts of the level had actually changed and affected which direction the player would stand. The actual implementation is a bit more hacky than that: rooms were rotated so its "floor" would be a wall/ceiling, giving the illusion of anti-gravity, and forces would be applied to parts of the level so that objects would "fall" in ways that would disobey gravity. I played around with this a little bit with a custom level, throwing shopping carts at walls and using force points to make them stick. Once I rebuild my PC maybe I'll include some footage.

Shown below is one of Half-Life: Alyx's trippiest rooms, exposed: forces are added at different parts of the hallway to make some objects "fall" towards the floor of the upper "flipped" hallway (link to level footage, 29:38).

Not sure if I ever recorded footage or if I even have that custom level anymore. Part of my dive into the HL:A Workshop Tools was to see if I could actually just build this game in Source 2 or at least as a HL:A mod, but ultimately it didn't seem flexible enough to build the foundation I needed, and at this point I don't think we're getting full Source 2 access the same way we have access to Unity and Unreal.

From here the progress of this idea died out for a bit; the pandemic was in full force and I had moved away from college back to my parents' house. I had a lot of time to spare for a couple months but I had school to finish and wanted to explore some other stuff around then. What ultimately froze the project though was that in July I got into a serious relationship, and various aspects of it took up a lot of my time for the remainder of 2020.

2022 - Initial ideas on vibe and story, and starting to piece things together

Portal and the pillars of a great game

A lot of other things made up my life throughout 2021, but the idea of this game continued to linger in the back of my mind. Around late 2021 and early 2022 things were cooling down, I had more time to use my PC and more time to organize my mind and my goals, including this game. During this time I was able to think on big aspects that I hadn't really thought of much before: what would this game actually look like, and be about? And what should I name it??

The first Portal is my favorite game of all time and is kind of the base reference for what I would want to make in almost every aspect. This is really generalizing it, and is all pretty obvious, but these things are the crucial pillars of a game I would be proud to make, all equally important:

- Novel gameplay mechanics that challenge and reshape the way players think about the real world.

- A compelling world and story that should also challenge a player's thinking, and provide a context to the gameplay that encourages players to think deeper and engage meaningfully with their play.

- A visual aesthetic that is beautiful and cohesive, and directs the emotional context for the story and gameplay by evoking certain moods. Portal would just not hit the same without that cool-toned blue-gray atmosphere that makes you feel the inhumanity of the test chambers.

- On the technical side, performant and free of significant bugs. I like games that optimize for weaker hardware like mobile platforms and take creative approaches to maximizing smaller performance budgets.

Of course, hitting all four of those points successfully is ridiculously hard and requires the right people in the right place at the right time, but I'm still allowing myself to shoot for the moon here with the hope of landing on a close-enough star.

Initial ideas for the game started off as the typical Portal-like vibe - you're in a lab, you're in test chambers that test the room-moving mechanic in various ways, you slowly uncover why you're in the test chambers and are compelled to break out of the lab, etc. It's definitely hard to break out of the "Portal-like" tropes if you have a game that revolves around gameplay mechanics that break traditional rules of spatial navigation; it's convenient to just explain it away as either magic or experimental technology. Even fast-forwarding to today in 2024, the basic ideas for Heaven's Few are still pretty much that. It did bother me for a while how easily I was falling into these tropes, but nowadays I'm okay with it and I'll just try to nail the execution and keep things interesting.

There was another early idea closer to my experiences in Oculus Medium - what if the game was set inside a program like that, and I'm inside the 'editor' exploring various reconstructions of real places? And maybe I slowly uncover the dark secrets and lost souls that haunt these places... Honestly that still sounds pretty cool, but I think what turned me off from it was that I would have to create highly detailed levels that basically look like photorealistic 3D scans, otherwise the whole premise might be lost on people. I still like the idea of a game set inside of an editing program though. I think Sam Barlow, in his interview on the AIAS Game Maker's Notebook podcast, mentioned an idea for a horror game set inside of an interactive architectural blueprint as a novel way to reduce art design load.

I think the first name I thought of was simply "TURN", referring to how the player could turn levels around themselves. I also thought of "Shift". I wasn't really happy with either so most of the docs around this time refer to the game as "turn prototype". The first prototype I mentioned above is called "shift vr prototype" on Itch, but you can see that the .exe name is "turn1". I had to call it something simple and this worked for now, and reinforced to me that this was still very much in prototype phase.

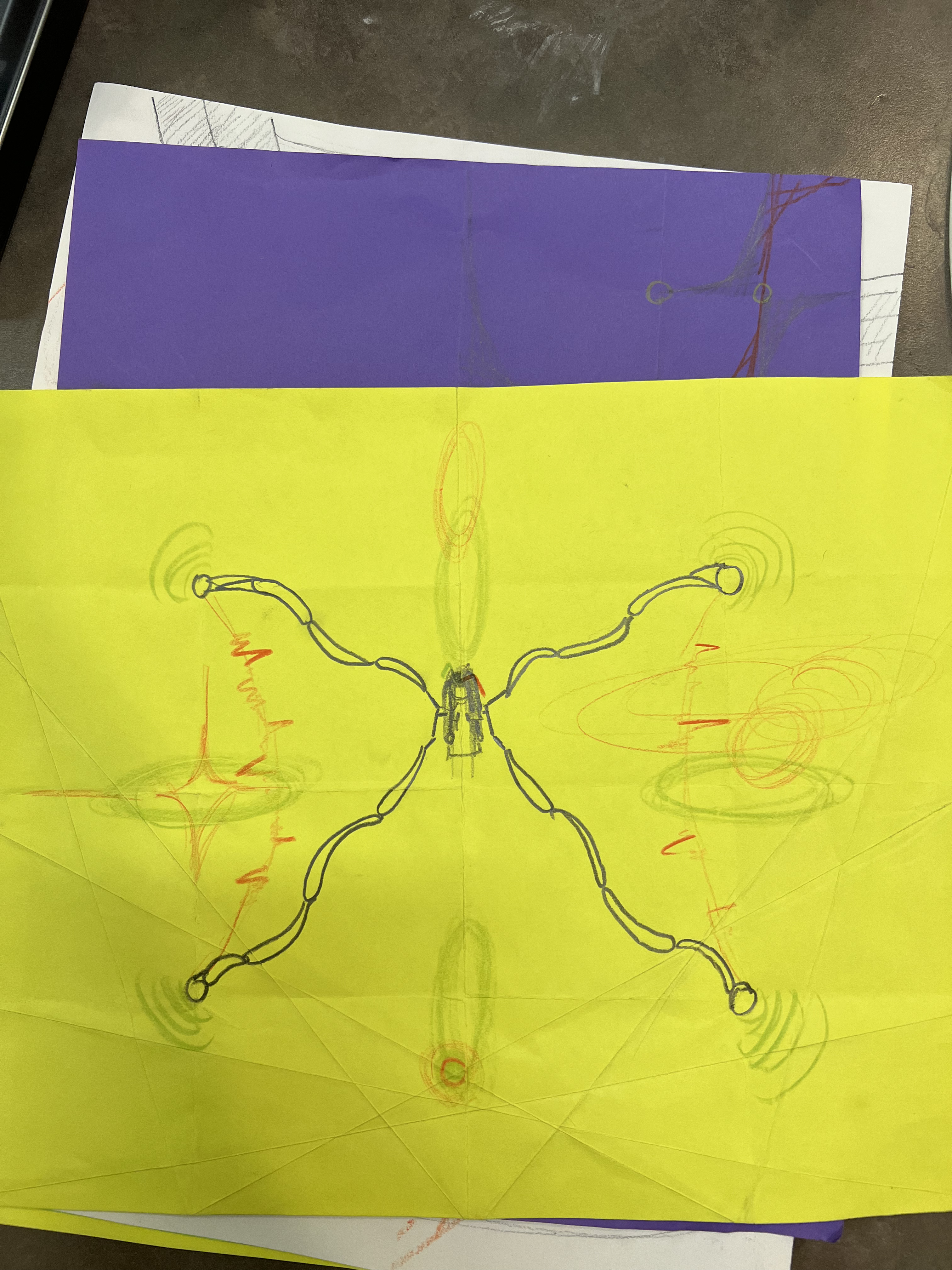

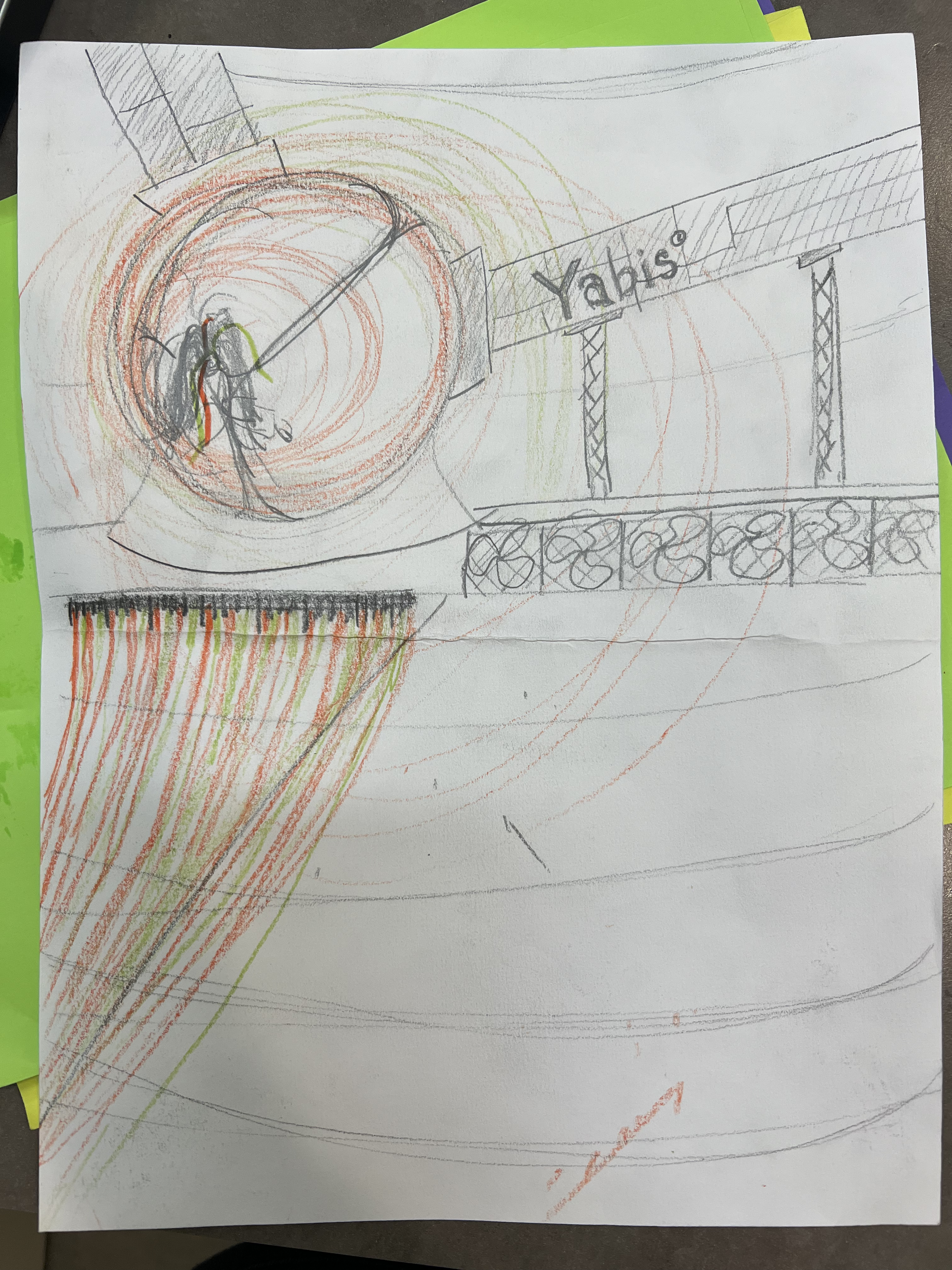

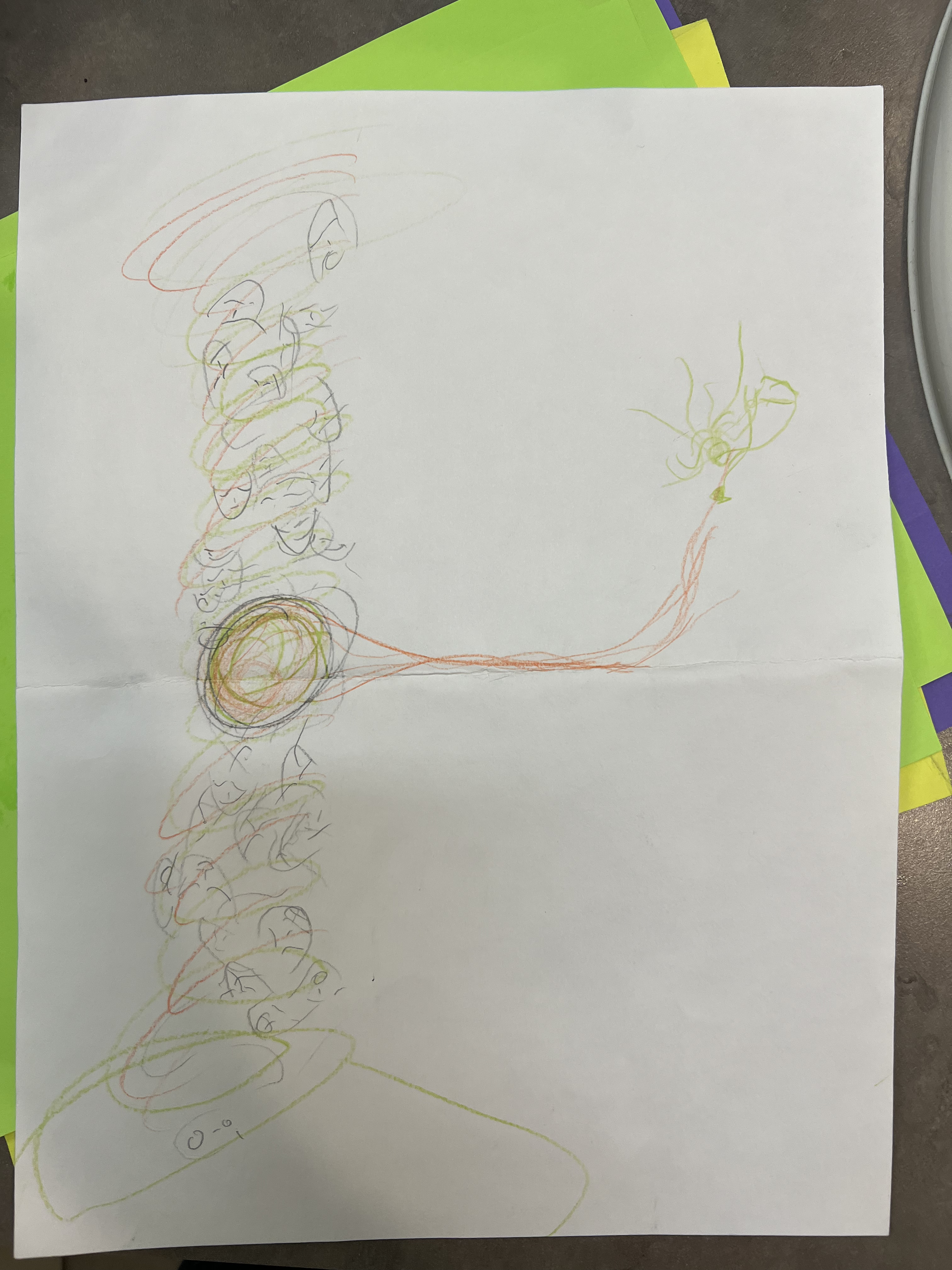

Early 'concept art'

I made some drawings around this time to feel out what kind of vibe I wanted for the game. Drawing is not my strong suit so uh.. keep an open mind about these.

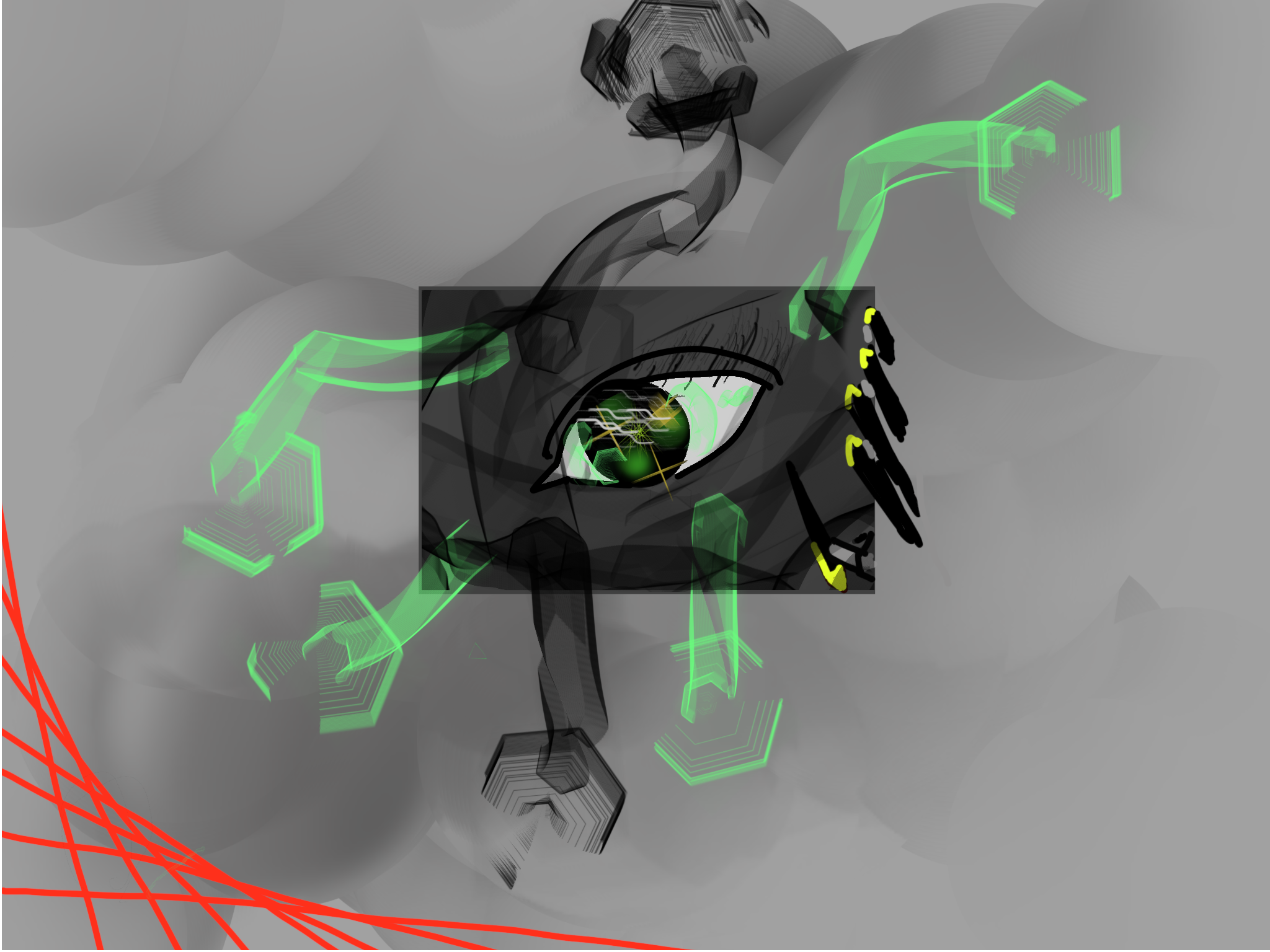

Cover art idea, it's you against the opps.

I was trying to evoke a grittier, funkier take on 2000s cybergoth type stuff with these two. The spikes on the right side of the last picture are big fingers with long neon nails.

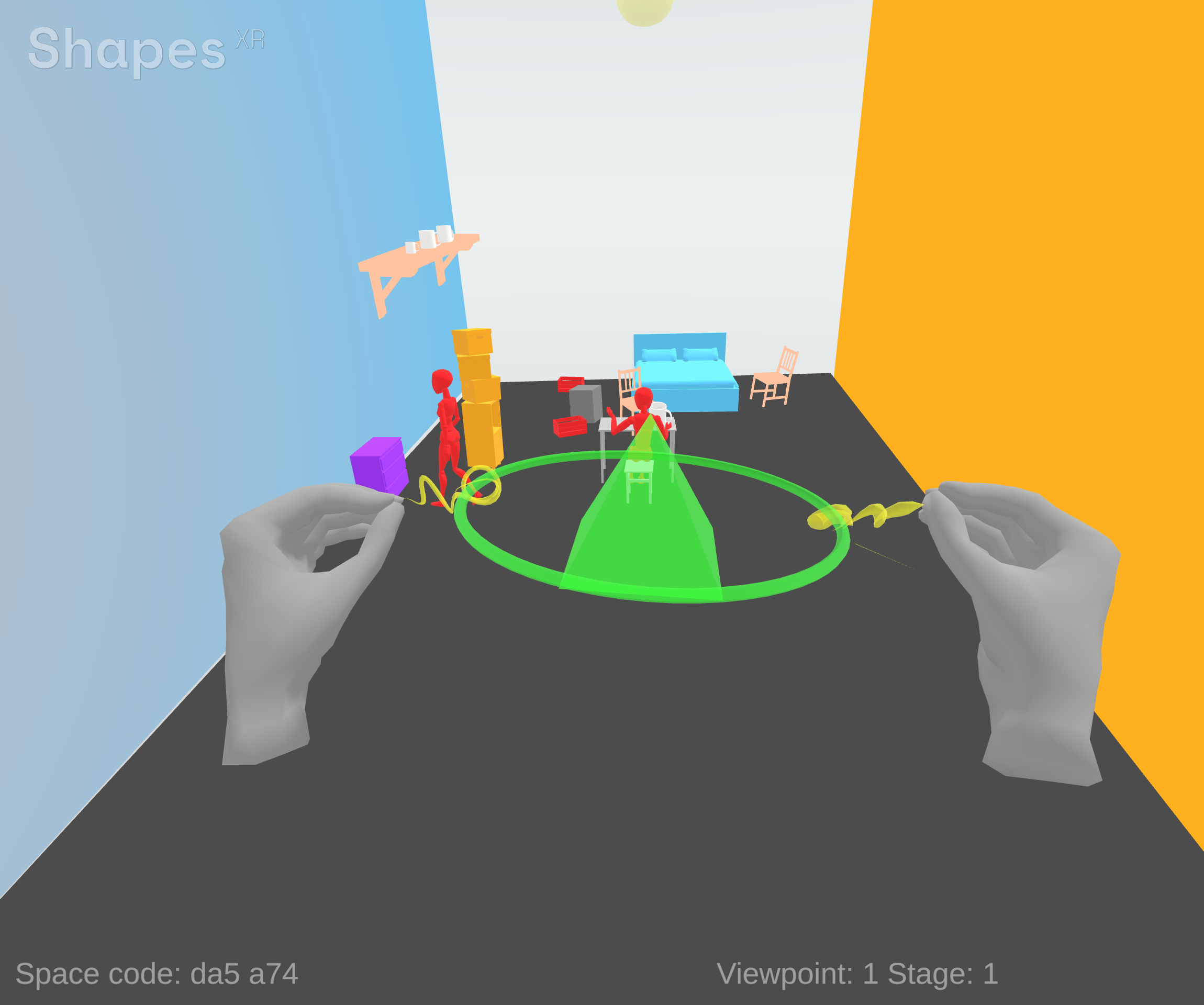

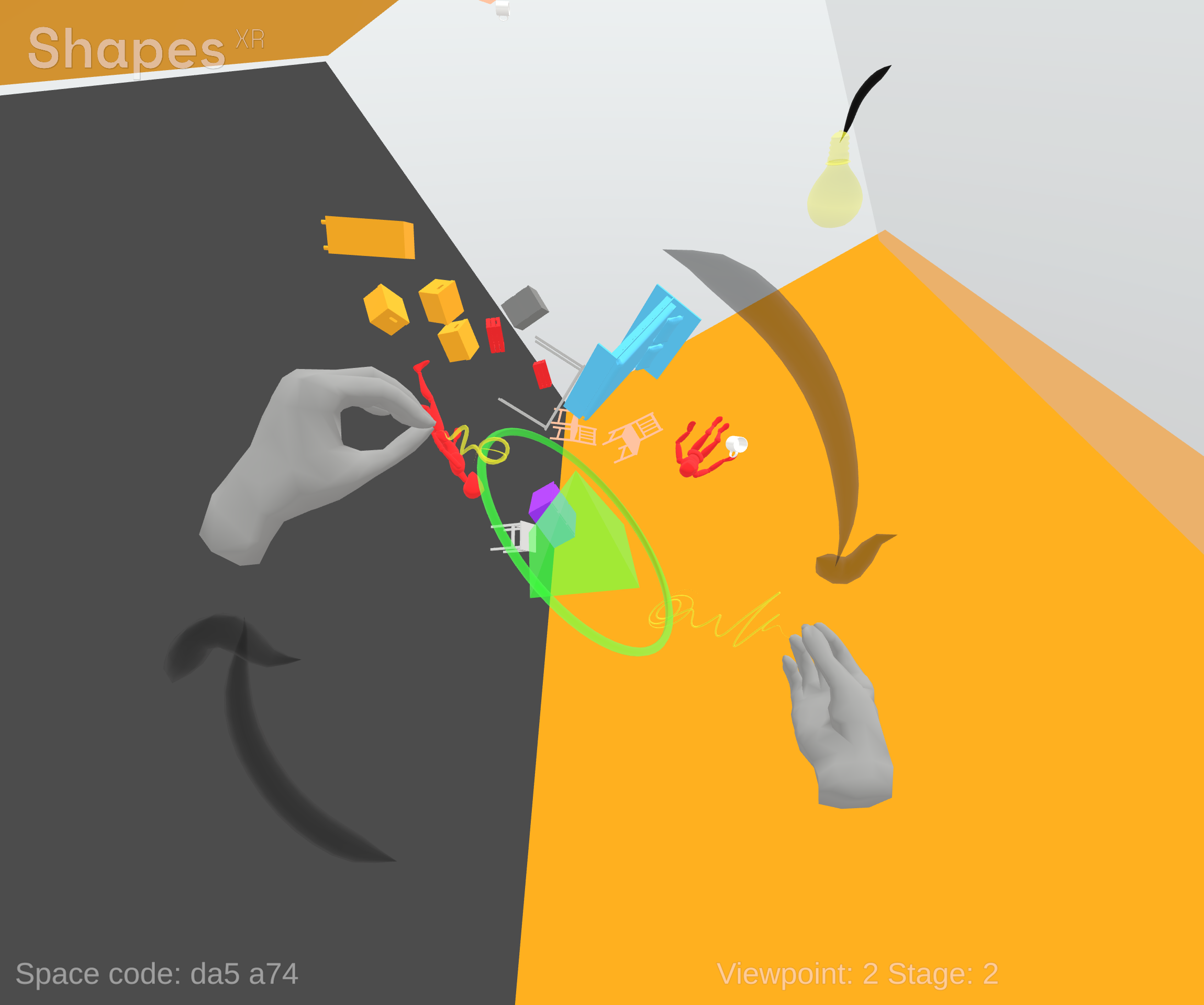

I also made this quick sketchout in shapesXR, a 3D editing tool for Meta Quest. This probably demonstrates the gameplay concept better than anything else I've shown.

First draft of the design document

I watched Mark Cerny’s 2002 “Method” talk at DICE around this time and realized I should probably lay out the foundation of a design document so ideation can be more focused and categorized. Definitely worth checking it out by the way, the main thing I remember is the distinct difference between the exploration of pre-prod/prototype stage versus the defined goals a team should have if an idea is good enough to take to production stage.

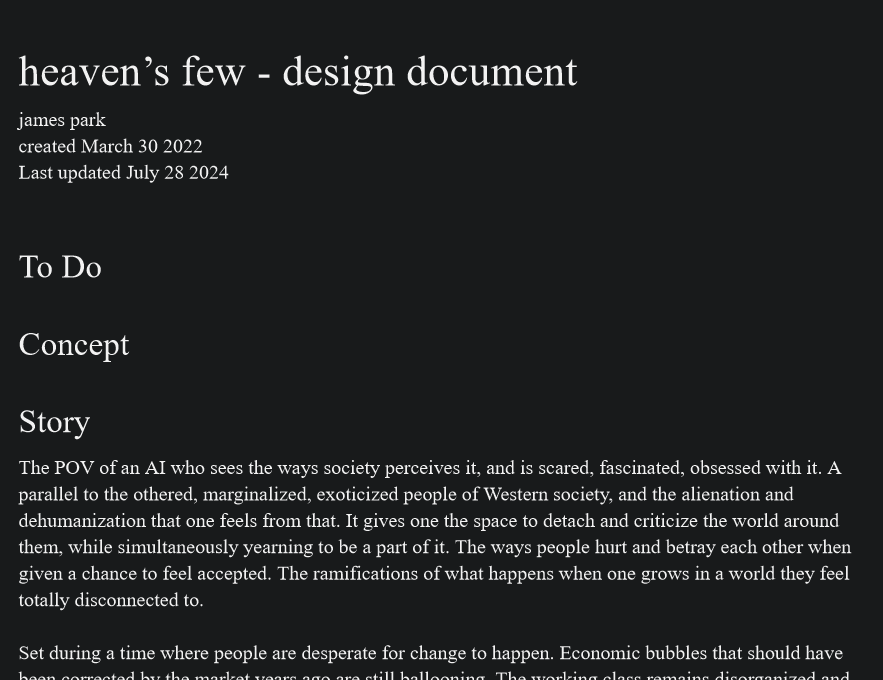

On March 30 2022 I created the Google doc that would be the design document. This is still what I’m adding onto now as I get more ideas; eventually I’ll have to do a pass where I remove ideas that didn’t stick and move more detailed material into separate documentation. In this first draft I basically just added categories based on an example game design document I found online, and copied in my notes and random ideas where it felt appropriate.

Level idea I posted early on in the doc.

Screenshot of the top of the doc now.

At this point I continued to try building on a Portal-type story, even down to containing the game mechanic in a tool the player wields. It was called the T.R.A.Y., aka the Tool for Revolving Around You. It would look like a tray, and you would shift the room around by moving the T.R.A.Y. the way you’d want the room to move.

Quote from the document:

tool for doing the abilities - starting to realize the “portal gun”/“the gameplay mechanic is a tool” trope is really overused, if you have that then your story basically always starts in a research lab or something lol

- the tool can be damaged by enemies

- can use as melee weapon

- or idk should it be like an eyeball? w an iris color that changes based on health

I would continue adding ideas here and there, but life would get in the way again and I hadn’t even started a new prototype yet.

Around July I started working full-time again, and in August I got into my current job as an SDE at Amazon Web Services. I thought this would pretty much kill off any chance to work on the game, but 2023 ended up being the year when prototyping finally took off.

2023: Prototyping in Unity, but then…

Bonelab, Trenchbroom, planning the year

Late 2022 was when Bonelab and the Marrow SDK had come out. I figured this could be a good way for me to quickly jump back into Unity and test out VR game ideas via Bonelab modding. The Marrow SDK features were really limited at launch though without installing additional mod tools.

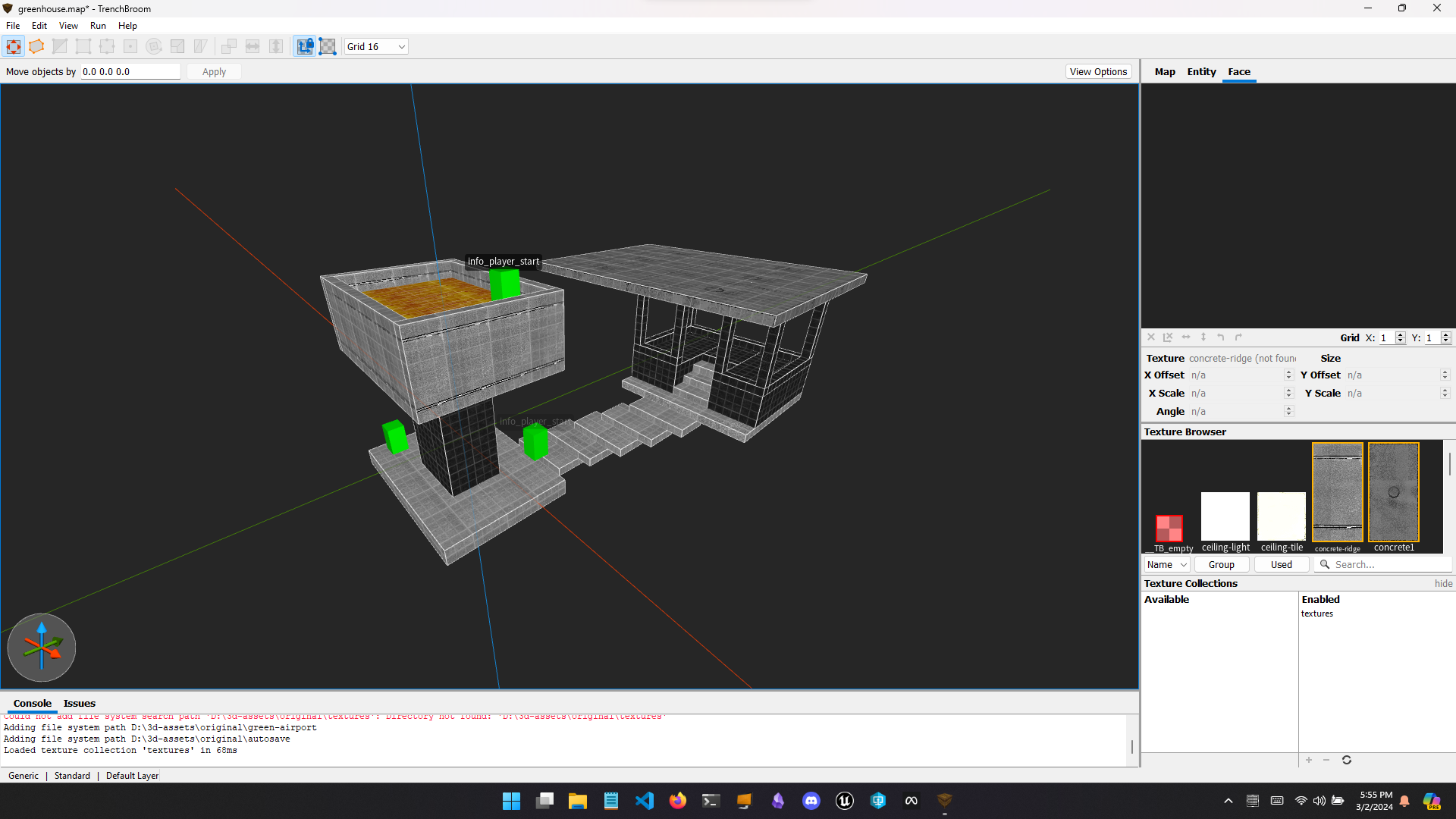

A bit earlier on I was introduced to Trenchbroom, an open-source level editor for Quake and GoldSrc maps. A big concern I had before starting the game was deciding which tools could help me cover my weaker areas of gamedev faster, and I was looking to Trenchbroom as a way to quickly block out and texture levels. Previously I would use ProBuilder in Unity for everything, but it was pretty clunky for doing anything more detailed than a really basic blockout. I also bought and tried the UModeler addon later but it still wasn’t quite what I needed for map-making (it seems pretty good though if you stick with it). The typical choice here would be fully diving into Blender but I still found it overwhelming and didn’t want to spend too much time learning it when I already needed to dive into Unity. To test whether Trenchbroom was a viable option, I made a couple of test maps and also tested the Trenchbroom-to-Unity workflow by importing the first map of Quake into Trenchbroom, then importing that into Bonelab.

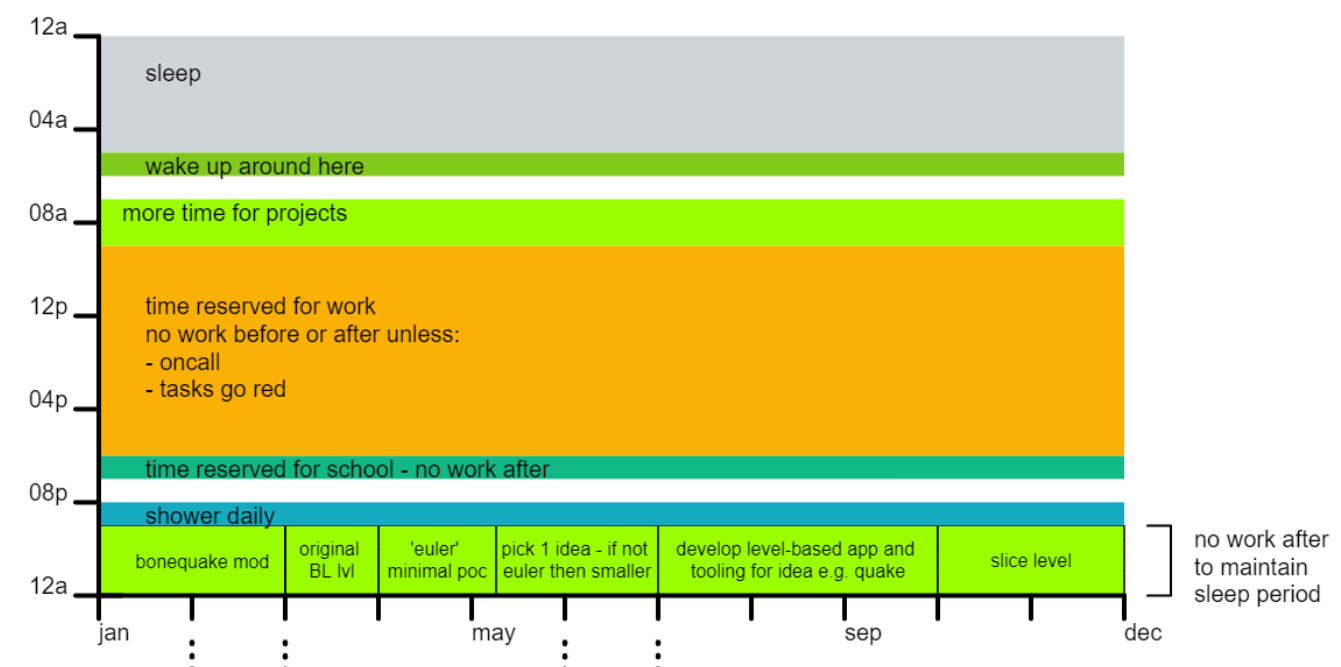

Early in the year I made a whole plan of how I would spend my time, this is what the schedule looked like:

This fell apart around March but it proved to be really helpful throughout the year to remind myself what I had planned on doing, and I think it did push me to do more with my time than I otherwise would have. 'Euler' was another name for the project. The 'level-based app' I mention is the structure used by Quake, the Half-Life games, and many others: I wanted there to be the foundation of the game, plus levels that you could download as files and just put in a folder in the game to play. Levels would be self-contained files/folders that keep their assets separate from the game foundation.
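As a rough illustration of that "drop a level into a folder" structure, here is a minimal Python sketch. The `level.json` manifest name, its `name` and `map` fields, and the `discover_levels` helper are all hypothetical choices for this example, not how Quake, Half-Life, or this project actually lay out their files.

```python
import json
import tempfile
from pathlib import Path

MANIFEST_NAME = "level.json"  # hypothetical manifest file name


def discover_levels(levels_dir: Path) -> list[dict]:
    """Return one metadata dict per self-contained level folder."""
    levels = []
    for folder in sorted(p for p in levels_dir.iterdir() if p.is_dir()):
        manifest = folder / MANIFEST_NAME
        if not manifest.is_file():
            continue  # a folder without a manifest is not a level
        meta = json.loads(manifest.read_text(encoding="utf-8"))
        # Resolve the map file relative to the level's own folder so the
        # level never depends on files shipped with the base game.
        meta["map_path"] = folder / meta["map"]
        levels.append(meta)
    return levels


def write_demo_level(levels_dir: Path, name: str) -> None:
    """Create a tiny level folder (only used by the demo below)."""
    folder = levels_dir / name
    folder.mkdir(parents=True)
    (folder / "map.bin").write_bytes(b"")  # placeholder for real map data
    manifest = {"name": name, "map": "map.bin"}
    (folder / MANIFEST_NAME).write_text(json.dumps(manifest), encoding="utf-8")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        write_demo_level(root, "chasm")
        write_demo_level(root, "playground")
        (root / "notes").mkdir()  # ignored: no manifest inside
        for level in discover_levels(root):
            print(level["name"], "->", level["map_path"].name)
```

The point of the design is that the game only needs to know where the levels folder is; everything else a level needs travels inside its own folder.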

Sharing progress, new prototypes, pulling in inspirations for story

Around April or May, my good friend Mark and I had the idea to share progress on our respective projects every Saturday so we could keep each other accountable. This pushed me to finally develop a prototype that would make the core mechanic playable, and to produce a recording that could explain the mechanic. I've made a couple more prototypes since then, but in some ways I feel like this first one was the coolest and the clearest at demonstrating the core mechanic of moving the room. The more I fleshed it out in later prototypes, the more obstacles I ran into during development, and the less effectively the result showed off the mechanic. In mid-July I recorded this gameplay of the prototype (link).

This was a standalone Unity project that used the Meta hand-tracking features that had recently been released. A pinch gesture would 'freeze time' (actually just turning off physics simulation for all level objects) and I could rotate the level with my hand.
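To make the pinch behaviour concrete, here is a small engine-agnostic Python sketch rather than the actual Unity code. It assumes the level is just a list of object positions, limits rotation to yaw around the hand for brevity (the real prototype rotated freely), and the `Level` class and its method names are made up for this example.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def rotate_yaw_about(point: Vec3, pivot: Vec3, angle_rad: float) -> Vec3:
    """Rotate point around the vertical (Y) axis that passes through pivot."""
    dx, dz = point[0] - pivot[0], point[2] - pivot[2]
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (pivot[0] + c * dx + s * dz, point[1], pivot[2] - s * dx + c * dz)


@dataclass
class Level:
    object_positions: list[Vec3]
    physics_enabled: bool = True

    def begin_pinch(self) -> None:
        # "Freeze time": stop simulating physics while the level is held.
        self.physics_enabled = False

    def end_pinch(self) -> None:
        # Releasing the pinch hands the objects back to the physics step.
        self.physics_enabled = True

    def rotate(self, hand_pos: Vec3, angle_rad: float) -> None:
        # Only a frozen (pinched) level follows the hand.
        if self.physics_enabled:
            return
        self.object_positions = [
            rotate_yaw_about(p, hand_pos, angle_rad) for p in self.object_positions
        ]


if __name__ == "__main__":
    level = Level([(1.0, 2.0, 0.0)])
    level.begin_pinch()
    level.rotate(hand_pos=(0.0, 0.0, 0.0), angle_rad=math.pi / 2)
    level.end_pinch()
    print(level.object_positions)
```

Using the hand as the pivot is what makes the room feel like it swings around the player instead of spinning around its own center.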

Our talks also encouraged me to think more seriously about the story and aesthetic of the game. I was (and still am) really into the y2k aesthetic and also the 2000s 'rave' style and atmosphere; think the beginning of The Matrix and the club scene in Pusher. I like those lighting rigs they have on DJ stages and having them beam green and red rays everywhere.

For lore and setting details, I decided to pull some elements from a worldbuilding exercise I did in college. I took a class called "Introduction to Game Narrative" or something like that (shoutout Prof. Chris Vicari at Fordham!!!) where we were introduced to the art of worldbuilding and Dungeons and Dragons-style DMing, to push us to think about how games can deliver narratives in ways that other mediums cannot. The final project for the first half of the semester had us develop a "world bible" describing a world that could facilitate roleplaying in TTRPG style.

I took this as an opportunity to flesh out a take on 'AI surpasses humanity' that I've thought about since high school, and thought even more about in 2019. It was the year of GPT-2, invasive data collection, and the mainstream emergence of this idea that AI is a real thing we should worry about. It became widely understood that online data is used for purposes beyond entertainment and communication. Alongside its use cases for surveillance and advertising, it is valuable for large language models that can 'grow' by training on social media and all the data that humanity has enthusiastically created and shared online for the last couple decades.

Long story short, I created an entire lore bible for a hypothetical TTRPG called "BEINGS OF RECREATION", with "RECREATION" meaning re-creation, not pickleball or walks on the beach. The main idea was that in 2500, the characters would be experimental AI models that were individually trained on different historical datasets, to manifest the 'personalities' of different Internet demographics. So there would be one trained on 2016 front-page Facebook content and ultimately be kind of like your average American right-leaning Gen X mom, and another one would be trained on 2012 4chan posts and have a more cynical personality and sharp sense of humor. In a similar way that Hyperdimension Neptunia's characters are manifestations of different game consoles living in their own world, this world had manifestations of internet subcultures living amongst each other. These AIs would have a sense of geography by treating servers as spaces and datacenters as the "cities" of this virtual layer of Earth.

Quote from the world bible:

These beings tribalize themselves within the models they learned from, segregated by year. The culture of each site, their memes, jokes, concerns, emotions, interests, are the religion and culture of these beings. It is all they know as they do not inhabit a physical world. Every memory of each individual is shared by everyone else of the same model (but again every being has an individual instance of thought) When a Being is born, it starts with the intelligence of their Model. HOWEVER, new memories and thoughts created are individual, and thus every Being grows differently from their initial model over time. The culture within each model is already incredibly rich which encourages same-Models to stick together. Some are more open to new ideas than others.

Was I cooking something interesting here or just fresh off an acid-induced period of mania that lasted months? Anyways I would think about this world every now and then, and fast-forwarding to 2023, the memory of this assignment made me wonder if playing as an AI could be an interesting game story. I wrote this all down when it came to me, and here is where the title "Heaven's Few" was first thought of, referring to a group of AI models feared and worshipped by society as they are the first to demonstrate capabilities beyond human comprehension. Pasted below is the entry in my "new ideas" note in Obsidian:

[[Turn prototype]] - different characters with different movement tech?

- one can move the environment itself (as described), this is the only way they can navigate the space

- one can grip the air as an instant handle, to launch from, spin around, etc. but obeys gravity

- limited number of handles can be used while in air, touch ground to reset

- maintain rotation so you can do stuff sideways, upside down

- snap to upright when hitting the floor

- in multi other players can also grab the hooks

- one can traverse in zero gravity but has to push off objects - already done in echo vr

- character 1 could bounce them around in a room

- the beings of recreation characters???

- the three were tasked with finishing the Navigational Quiz, an obstacle course humanly impossible to complete. Countless beings had tried, but only these three figured out methods of manipulating space itself, meaning they could finish the test and officially "surpass" humanity.

- First level: you play as A, running your 8 billionth iteration of the Quiz. Like every other time, you manage to pass the first few obstacles with above-average running , jumping, and bullet-time focus (demonstrate basic controls). Eventually you reach where no one has crossed, it's just a very deep chasm that goes on for 100 meters. As you jump, you pause and take the time to calculate a solution, and you figure out the first ability: turning the entire space around you. You do so for the first time, and walk on the ceiling towards the end.

- You then do the same, playing as B (using space handles to launch yourself far enough), then playing as C (pushing off the back wall and the side walls and floating to the end).

- they were nicknamed "heaven's few", both idolized and hated by mankind. they had not yet entered the real world, but were in a physically realistic simulation environment run by scientists at MIT. once they passed the test, it made the news and caused widespread shock. the government demanded they study the neural networks and datasets that could reproduce the results, to realize the experiment in real life.

- levels would involve enemies being replicas of the characters, using the same movement mechanics

- The first one was deemed too powerful and forced into a "coma" by the government, unable to perform tasks but continuously having "dreams" of the human data it was trained on. The other two are tasked with exploring its dreams and investigating its true capabilities and intents.

- the dreams are distortions of everyday human spaces - infinitely random pools, apartment stairs, hotel hallways

Hits on a lot of cliches and tropes, but could maybe be an interesting game right? And they say it's all about the execution, so... maybe this could be good if the execution is good.

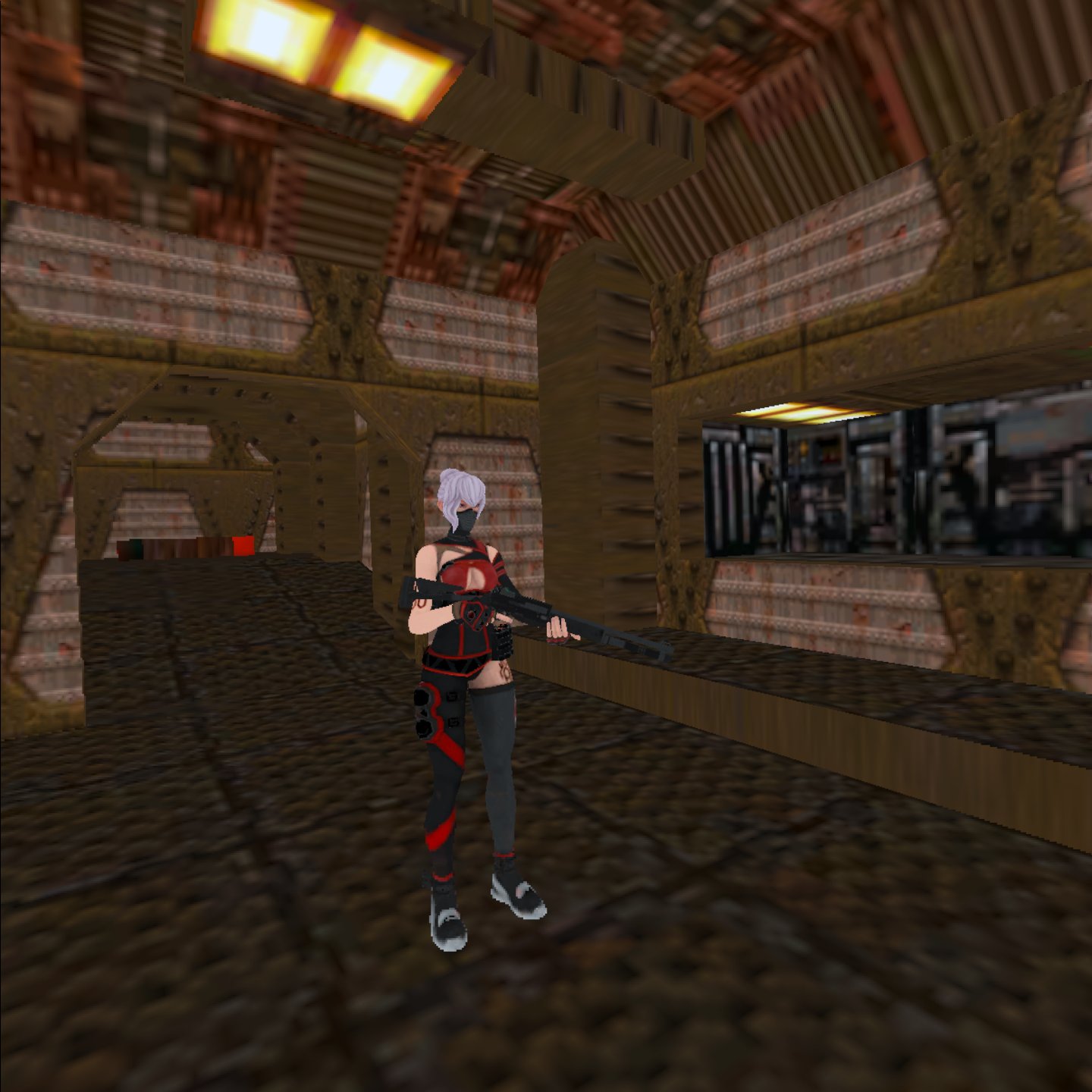

A month later in late August 2023, I produced another prototype. This one was in a big box like the last one, but now it had a concrete texture and it was more of a physics playground with stairs, tunnels, ramps, etc. so that I could have more fun shaking the room around and seeing what you could do by throwing around some physics cubes and balls. I think this is the most "fun" prototype I've had so far, at this point my janky implementation of the mechanics was playable enough (I switched back to VR controllers instead of hand-tracking, for better accuracy) and I integrated the Auto Hand Unity addon as the foundation for the VR interaction mechanics - I could climb walls, grab and throw objects, and float and walk around as I pleased. I iterated on this prototype until mid-September (link).

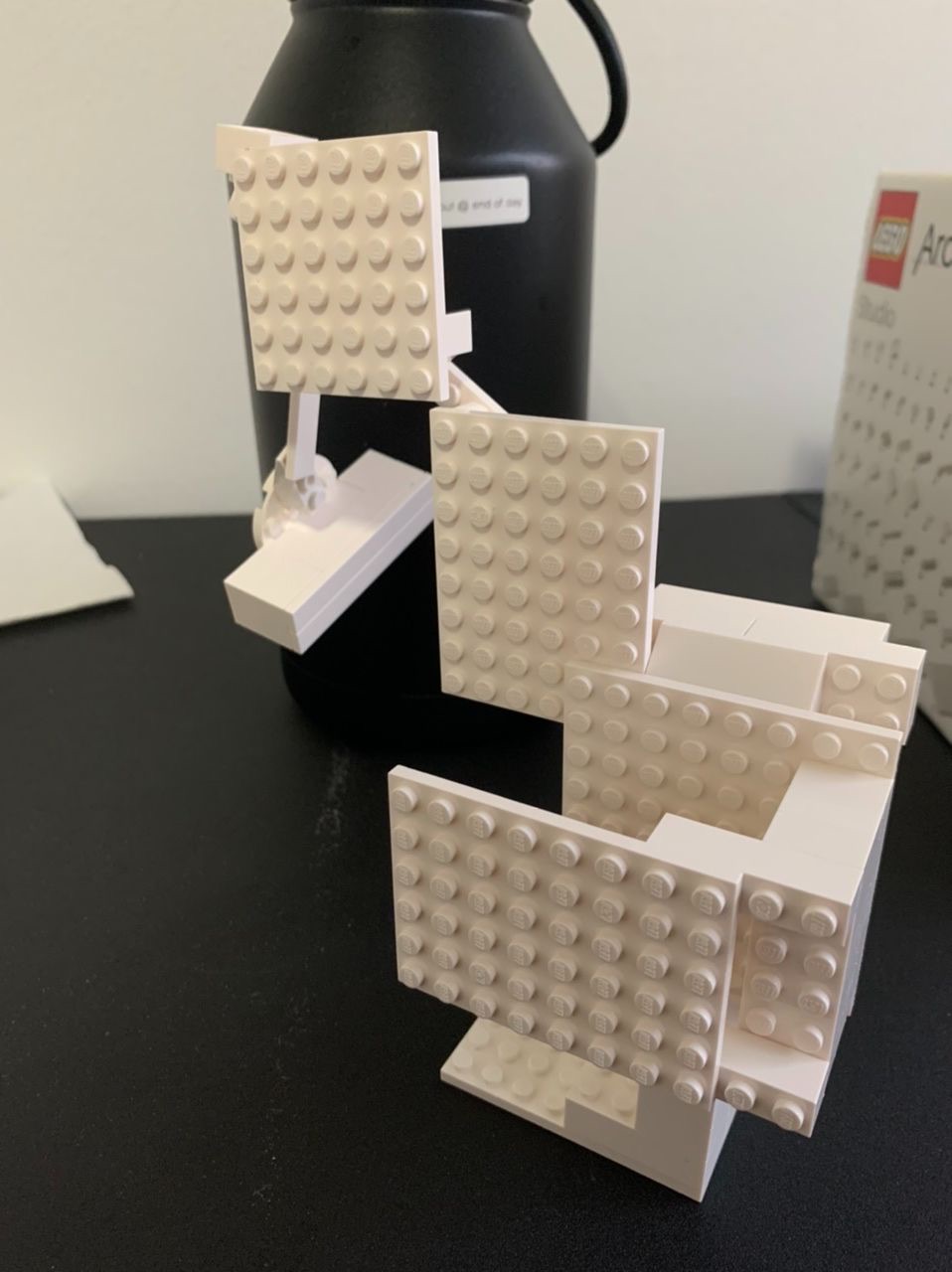

Some concept art around this time in Pixel Studio

The last Unity prototype, and the big decision

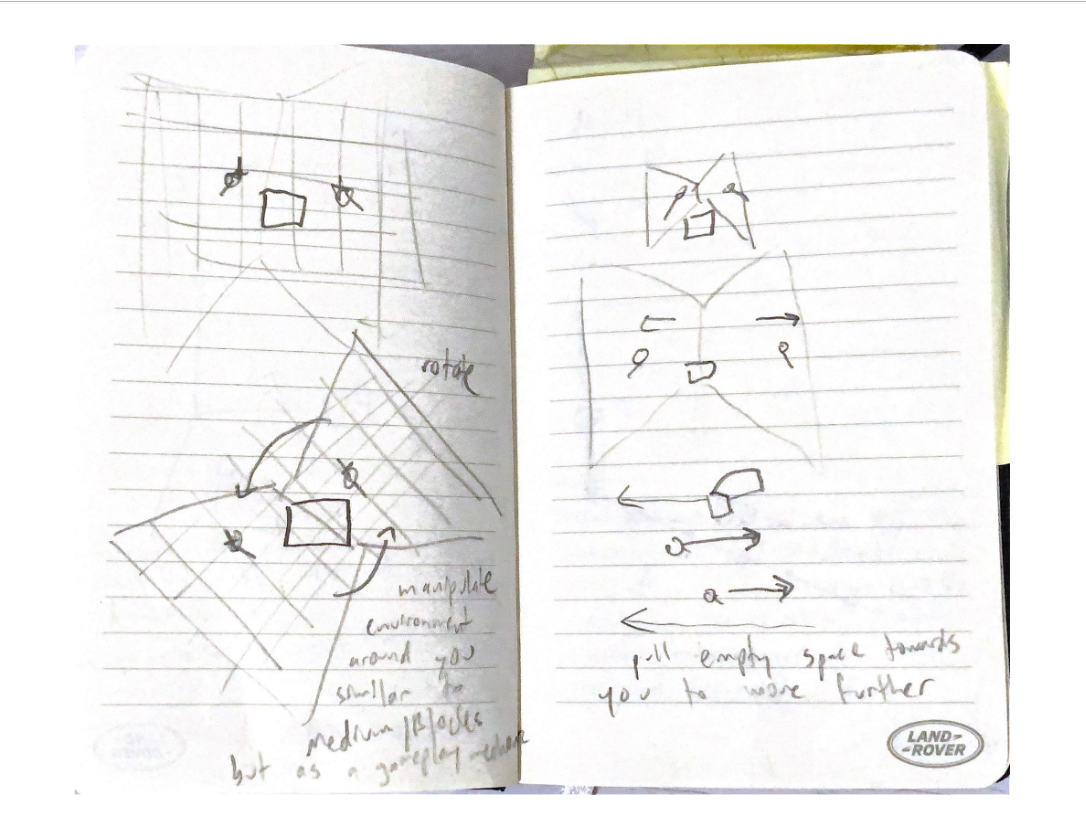

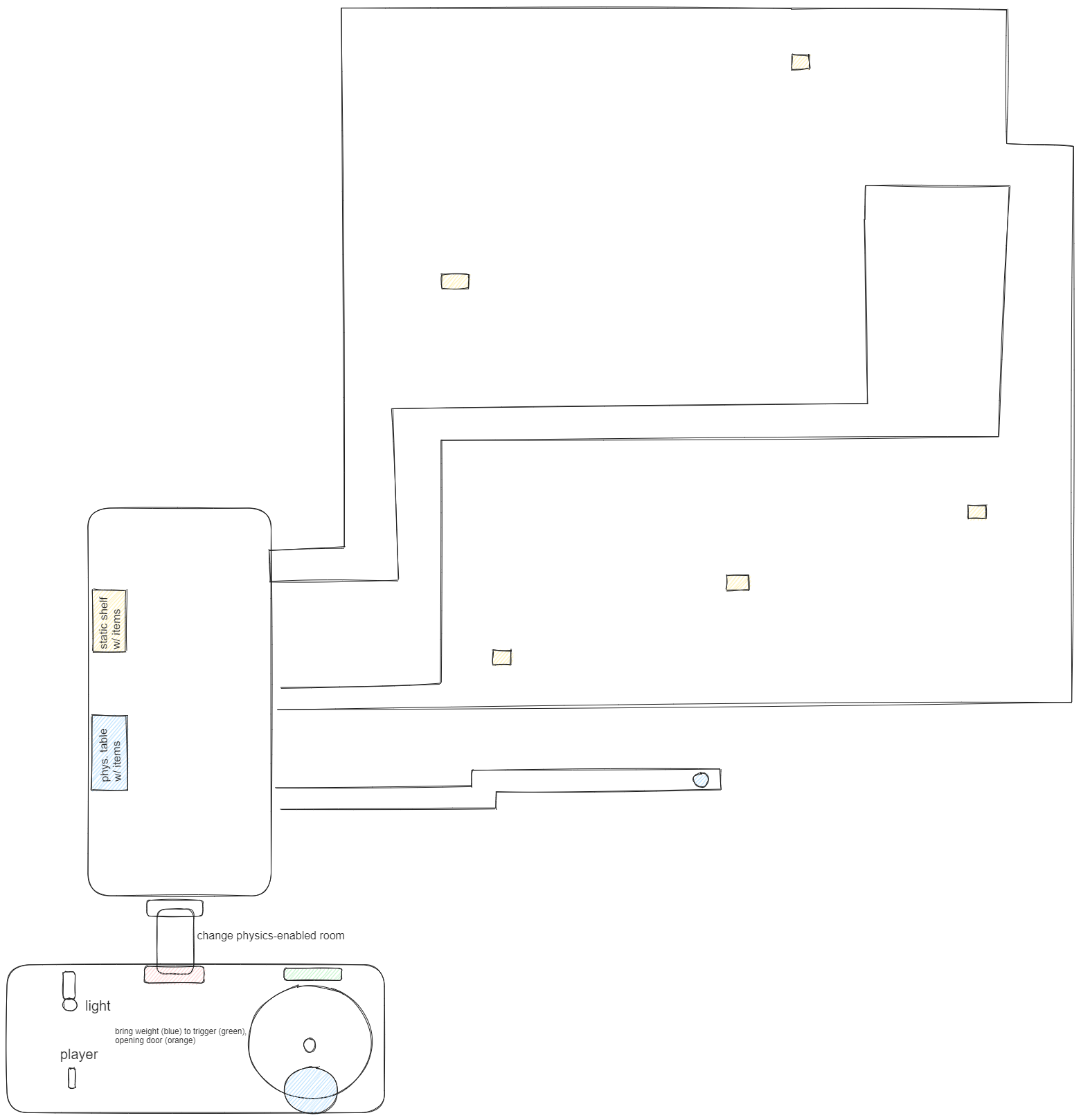

After the 'concrete playground' prototype it seemed like it was time to try making a whole level, with more than one room and some sense of progression. I sketched out a layout in Excalidraw and began mapping it out in Unity; I wasn't fully on Trenchbroom yet for level mapping, so for this I think I used UModeler. The level would have big spaces where the player would float around and learn to launch off walls to bridge large gaps, and have some other ideas like spinning a weighted, heavy wheel by rotating the entire level.

Making a multi-room level as opposed to a single box room started to strain the 'jank debt' that I had accumulated by bootstrapping on top of the Auto Hand addon and implementing my game mechanic in a weird way. The two worst examples of this were that

- the easiest way I could move and rotate the entire level based on my hand movement was... parenting the entire level GameObject under the left hand GameObject. This was weird and definitely not scalable to a level with many GameObjects, but a more apparent technical issue came about from the fact that I'm, well, moving the entire level constantly. This led to #2:

- the level not being static meshes meant no baked lighting, and purely realtime lighting would be a performance death sentence, not even mentioning the fact that I was building for the Quest mobile platform. And for reasons that are too specific to explain, every collider required TWO colliders, because the Auto Hand hands could not grab onto a collider with a certain property that I needed, so EVERY MESH had two colliders on it. This was largely because Auto Hand had a different approach to climbing than what I needed, and didn't naturally support free-climbing walls (like the climbing in Boneworks), and definitely would not accommodate free-climbing walls that weren't static.
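For reference, the parenting trick in the first bullet boils down to one piece of transform math: remember the level's pose relative to the hand at the moment of the grab, then re-apply that offset to wherever the hand is now. The Python sketch below shows that math without touching any scene hierarchy. It assumes rigid transforms with no scale, and `Rigid`, `LevelGrab`, and `rot_y` are names invented for this example. It only reproduces the motion; it does nothing about the lighting or collider problems in the second bullet.

```python
import math
from dataclasses import dataclass

Mat3 = list[list[float]]
Vec3 = list[float]


def mat_mul(a: Mat3, b: Mat3) -> Mat3:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def mat_vec(a: Mat3, v: Vec3) -> Vec3:
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def rot_y(angle_rad: float) -> Mat3:
    """Rotation matrix for a yaw of angle_rad around the Y axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


@dataclass
class Rigid:
    """A rotation plus a position (no scale)."""
    rot: Mat3
    pos: Vec3

    def __matmul__(self, other: "Rigid") -> "Rigid":
        # Combined transform: apply `other` first, then `self`.
        moved = mat_vec(self.rot, other.pos)
        return Rigid(mat_mul(self.rot, other.rot), [m + p for m, p in zip(moved, self.pos)])

    def inverse(self) -> "Rigid":
        # For a pure rotation the inverse is the transpose.
        rt = [[self.rot[j][i] for j in range(3)] for i in range(3)]
        return Rigid(rt, [-c for c in mat_vec(rt, self.pos)])


class LevelGrab:
    def __init__(self, hand_at_grab: Rigid, level_at_grab: Rigid) -> None:
        # The level's pose expressed in the hand's frame: the same offset a
        # parent-child relationship would store as the child's local pose.
        self.level_in_hand = hand_at_grab.inverse() @ level_at_grab

    def level_pose(self, hand_now: Rigid) -> Rigid:
        # Re-apply the stored offset to the hand's current pose.
        return hand_now @ self.level_in_hand


if __name__ == "__main__":
    identity = rot_y(0.0)
    grab = LevelGrab(Rigid(identity, [1.0, 0.0, 0.0]), Rigid(identity, [0.0, 0.0, 0.0]))
    print(grab.level_pose(Rigid(rot_y(math.pi / 2), [1.0, 0.0, 0.0])).pos)
```

Computing the pose explicitly like this keeps the level at the top of the hierarchy, which is one possible way to avoid nesting a whole level under a hand object.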

Despite this, I figured that I would have this fleshed-out level prototype in hand that I could show people, and after I finished building it I could start the project proper and build a workflow that is performant and easier to build levels for. At least I would have this prototype that I could play around in, and refer to while building the real game.

This was around September 2023. And if you are familiar with Unity history around this time, you will probably not be surprised by my decision that month to throw out the years of experience I had learning Unity, and switch to another game engine.

September 12 2023 was when news broke of Unity's new plans for a 'pay-per-install' fee that, in the initial announcement, had implied that it would apply to all versions of Unity past and present. Read an archive of an article about the announcement here. The important thing was that this scared a lot of independent and small game developers, and eroded an already eroding foundation of trust that indies had with Unity, because the initial terms of the change had implied that any small developer with a Unity game would be hit with large fees if their game one day went viral and received millions of downloads, even if the game was completely free-to-play. Yes, they eventually rolled back these terms to something much more reasonable and even introduced some benefits for small-scale devs in the change, but even this rollback caused concern as it demonstrated how easily the company could - and more importantly, did - change the terms of their relationship with developers based on the whims of senior-level executives.

I remember seeing that the developers of Slay the Spire fully committed to leaving Unity after this and switched to Godot for their future projects, and it forced me to do the grown-up thing and seriously think about what I needed for building Heaven’s Few long-term. I was thinking about the best way to keep this project healthy over the next couple years, and not land it in a situation where its chances of future success are affected by the health of its technical bedrock, the game engine itself.

I still miss how fast I could prototype and iterate in Unity, how well it ran on my Macbook, the fast startup, the Asset Store, the tutorial community, and the strong, still unmatched support for VR and especially Quest titles. But at the time, this news worried me the more I tried to imagine myself and the game in, say, 2027. And if this caused a large part of the community to move away to another engine, I honestly just wanted to use the engine with the best community, since I needed all the external help I could get. On top of this, the technical debt of my prototypes had me realizing that creating a production-level Unity game is a very, very different beast than prototyping, and would require a lot of discipline to avoid a whole array of known pitfalls that plague independent Unity developers. I still didn't know enough about game engines and the game development process to make a fully informed decision, so I just thought: which game engine had a community like Unity's, was popular enough so that I had plenty of examples to learn from, and had a history of developer trust and long-term support? I had played around with Godot a little before this, and was really impressed by the UX and just how fun it felt to use, but I didn't see myself as one of the early adopters for its 3D features. Godot was still too new to the point that building a production-level game on this for Quest would mean being the first to run into obstacles that have already been addressed in more mature engines.

So, instead of Unity or Godot, even though there weren't many Quest or mobile games running this, and most of its new features were irrelevant to VR, and many recent games on this engine had very concerning issues with in-game stutters (which absolutely must not happen in VR), and the UI/UX looked overwhelming compared to Unity and Godot, I started to look into Unreal Engine 5.

I wasn't the only one doing this, and there were a lot of new resources that taught Unity devs how to transfer most of their engine knowledge to Unreal's paradigms. Unreal seemed promising, but the only way I would know for sure was if I dove straight in and worked on a project inside it. So, I resolved to keep using Unity to iterate on this 'level' prototype until it was stable enough to play through, and once I had a build of that prototype that I could play around in, call it quits with Unity and build a 'vertical slice' of the game in Unreal, that would continue the last prototype's goal of demonstrating a look and feel for the game that would give an idea of what the final game could be like. My last recording of the last Unity prototype was on November 1 2023 (link).

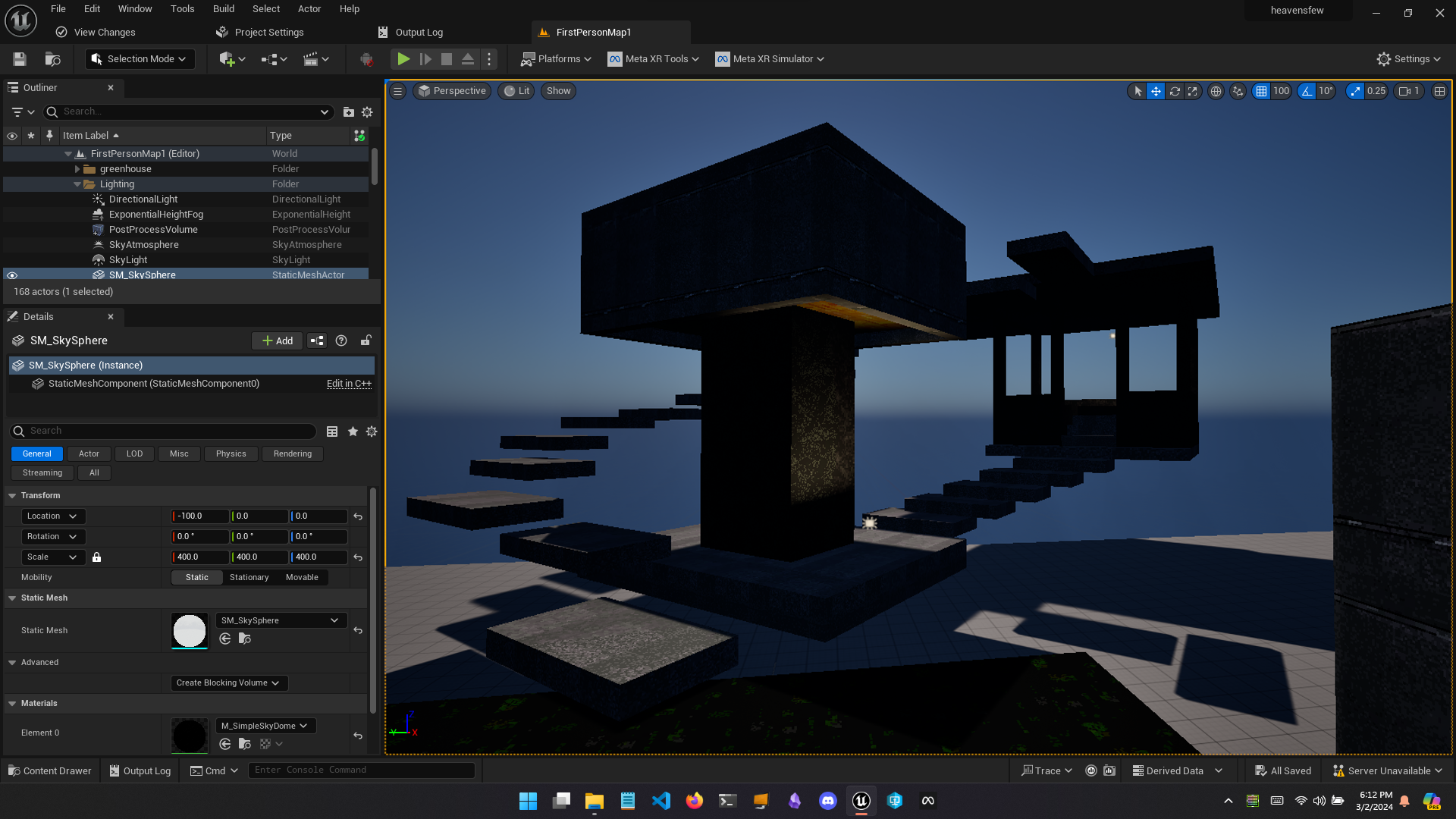

By November 26 2023, according to my Discord message history, I was able to get a playable VR project in Unreal Engine 5.3 built and running on my Quest 2.

2024 (so far) has been a lot less dramatic than everything you just read above. That stretch of time between July and November 2023 was when a lot of formative work happened, and seeing my 4-year-old idea finally come to life for the first time is a feeling I will always remember.

2024: Unreal Engine 5.3, storybuilding, the foundations of a production workflow, and what's next

VRExpansionPlugin

For now, my approach to learning Unreal Engine has basically been to dive straight back into the game and try making what I need to make, and throughout the year I've also gained additional experience by doing a couple side projects in the engine.

The first thing I needed to quickly onboard to VR was to see what kind of VR frameworks were out there for Unreal, similar to how I used Auto Hand in Unity. There is an official VRTemplate that provides basic functions like grabbing and teleporting, but I found that a couple popular VR games made in UE4 used an open-source plugin called the VR Expansion Plugin; the big examples are Ghosts of Tabor and Into The Radius VR. What gave me confidence to choose this as the foundation for my own project was that, although both of these games started as PC VR games, they were both ported to the Quest and they both run relatively well on it. On January 6 I uploaded this recording of me in a box level, using the VREPlugin character, and testing out a really, really broken version of level rotation (link):

Setting up AWS and a Perforce version control depot

Another crucial element of building a big piece of software is version control. While Git is the go-to for most people and what I’m familiar with, Unreal’s built-in version control features seem to work best with Perforce, so I went with that since I didn’t want to fight the engine too much. What pushed me to finally get this set up was the fact that I’ll eventually want more people to work on the project, and once I have this set up, sharing the project would be as simple as adding a new Perforce user and sharing the server details. Well, kind of. I’m using the pre-built version of 5.3, and for reasons I’m forgetting right now, any contributors would also have to download the same binary and set up third-party plugins themselves before pulling in the project (I think I have the VREPlugin as an engine-side plugin?). Once things are set up though, my future team members should be able to push and pull project updates easily.

Even though it's a bit more expensive and unnecessary at this stage of development, I opted to host the Perforce server in a new AWS account I created for any cloud resources the game might need. I undershot the hardware requirements at first and the server struggled to ingest the initial commit with the entire project folder, so I had to restart the setup process on a host with more CPU and disk space. Since it's just me working on it right now, I stop the EC2 instance whenever I'm not committing changes to save on cost. I was also granted $300 in AWS credit from some promotional email and I think I'm still using that.

Trenchbroom to Unreal

This time I wanted to start building and playing in maps that actually look decent, so I started looking into importing Trenchbroom maps to Unreal. Thankfully this is a workflow already covered by other developers, using the HammUEr plugin. The creators of a game called GRAVEN posted a guide on their Steam page on how they used Trenchbroom to create production-ready levels in UE4, and this helped me get some of my old Trenchbroom maps into UE5.

What about textures?

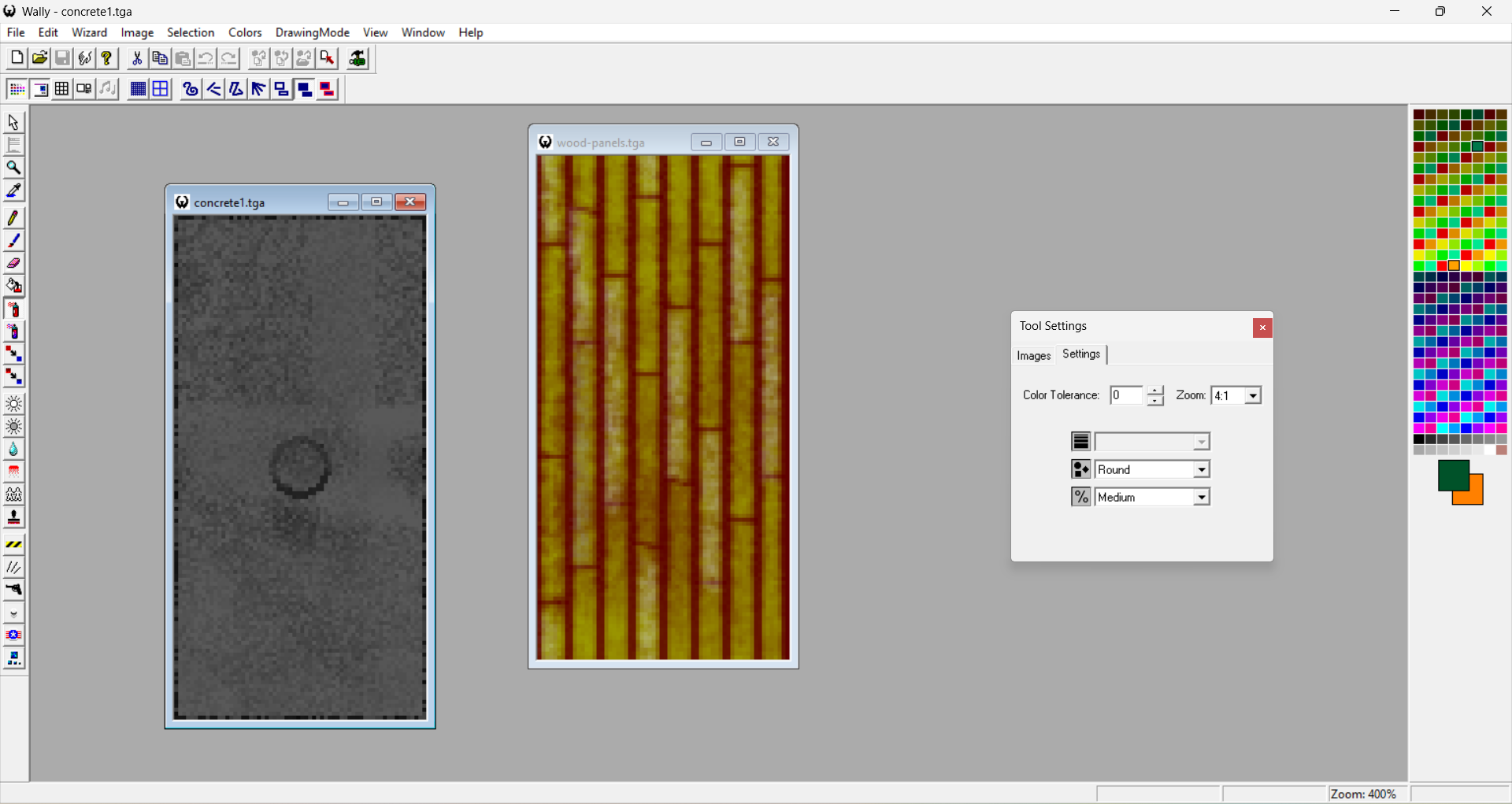

I have been using an old texture manager called Wally to make collections of low-res textures. It is intended for old games like Quake, and the limited color palette those games support keeps the textures in a consistent tone. It has just enough art features to make materials like wood, steel and concrete, and a couple of features like stamping and tinting that make it easy to add natural detail.

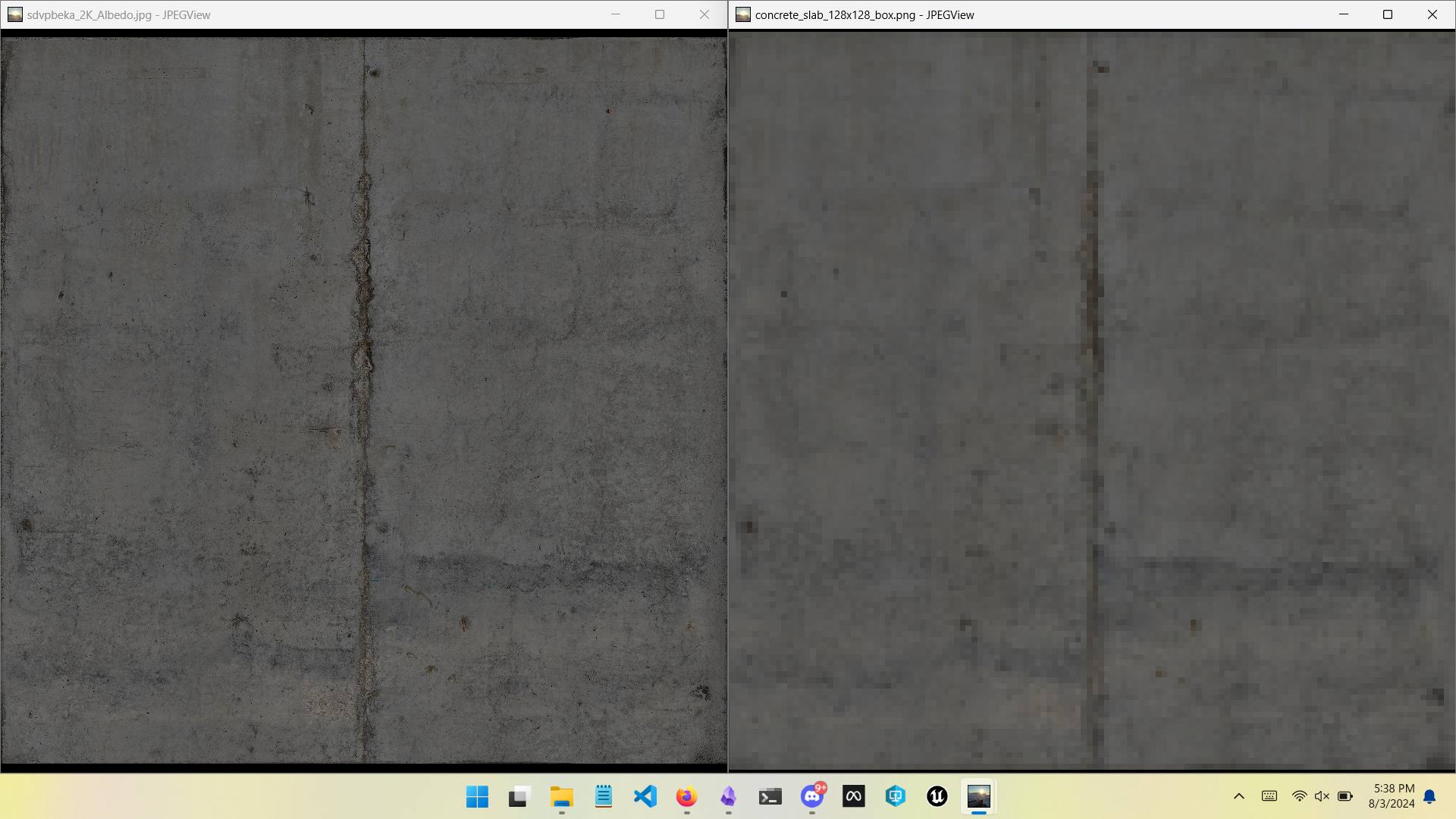

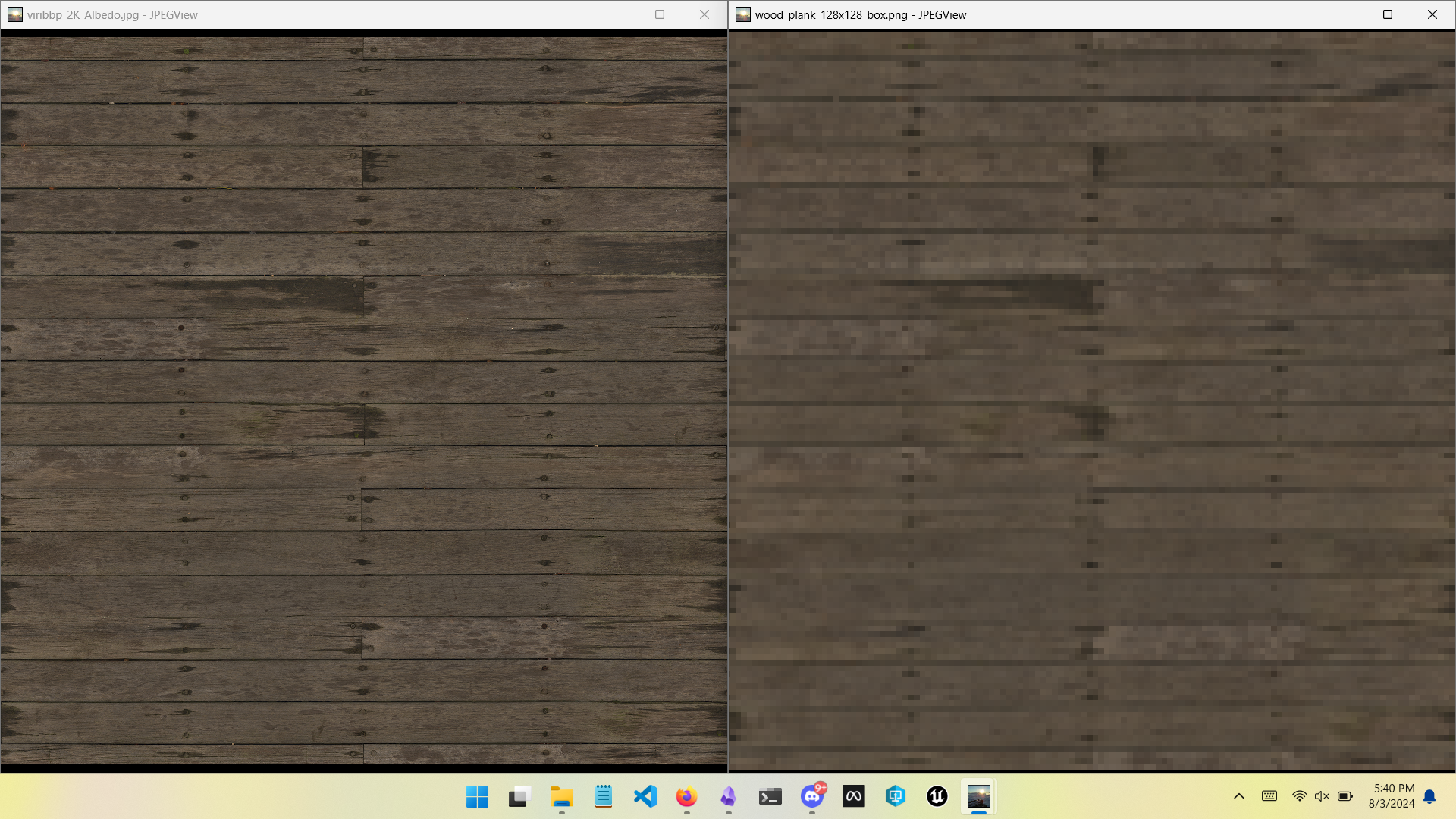

More recently though, I have been pulling the 2K resolution textures from Quixel Megascans materials and downsizing them with ImageMagick's Box filter, and the results give off that grimy Half-Life 1 look that I'm going for.

Right now I'm targeting 128x128 textures with no filtering and no compression; I'm keeping it low-res to imply a lot of detail, and removing filtering so that the textures don't look all soft and smeared over. This might end up causing a lot of aliasing on high-contrast edges, which could be distracting in VR, so I'll adjust this as I continue to iterate on the art side. For now though this seems like an easy way to produce a consistent aesthetic and quickly make different types of surfaces. It also means that I don't have to worry about texture memory at all.
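As a rough sanity check on the texture memory point, here is a back-of-the-envelope sketch. It assumes uncompressed RGBA8 (4 bytes per texel), no mipmaps, and that "2K" means 2048x2048; none of these numbers are measured from the actual project.

```python
# Rough texture memory estimate.
# Assumptions (illustrative only): uncompressed RGBA8, no mipmaps.

BYTES_PER_TEXEL = 4  # assumed RGBA8: one byte each for R, G, B, A


def texture_bytes(width: int, height: int,
                  bytes_per_texel: int = BYTES_PER_TEXEL) -> int:
    """Return the raw size in bytes of a single uncompressed texture."""
    return width * height * bytes_per_texel


low_res = texture_bytes(128, 128)       # the downsized target
source_2k = texture_bytes(2048, 2048)   # assumed size of a "2K" source

print(f"128x128:   {low_res / 1024:.0f} KiB")
print(f"2048x2048: {source_2k / (1024 * 1024):.0f} MiB")
print(f"ratio:     {source_2k // low_res}x")
```

Under these assumptions, a 128x128 texture takes 1/256 of the memory of its 2K source, even with compression turned off.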

magick ./concrete_slab_2k.jpg -filter Box -resize 128x128 concrete_slab_128x128_box.png
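For a whole folder of source textures, the same command could be wrapped in a small script. This is only a sketch: the function names, the folder layout, and the rule of stripping a trailing "_2k" from the file name are hypothetical, and it assumes the `magick` binary is on the PATH. It defaults to a dry run that only returns the commands it would execute.

```python
from pathlib import Path
import subprocess


def build_resize_command(src: Path, out_dir: Path, size: int = 128) -> list[str]:
    """Build the same magick invocation as above for one source image."""
    # Hypothetical naming rule: concrete_slab_2k.jpg -> concrete_slab_128x128_box.png
    base = src.stem.removesuffix("_2k")
    out_path = out_dir / f"{base}_{size}x{size}_box.png"
    return ["magick", str(src), "-filter", "Box",
            "-resize", f"{size}x{size}", str(out_path)]


def downsize_folder(src_dir: Path, out_dir: Path, size: int = 128,
                    dry_run: bool = True) -> list[list[str]]:
    """Collect (and optionally run) one resize command per .jpg in src_dir."""
    commands = [build_resize_command(p, out_dir, size)
                for p in sorted(src_dir.glob("*.jpg"))]
    if not dry_run:
        out_dir.mkdir(parents=True, exist_ok=True)
        for cmd in commands:
            # check=True raises if magick exits with an error
            subprocess.run(cmd, check=True)
    return commands
```

Calling `downsize_folder(Path("megascans_2k"), Path("textures_128"))` with those hypothetical folder names would list the commands without touching any files; passing `dry_run=False` runs them.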

Story developments

I have been able to come up with a lot more ideas regarding the story, mainly the big themes I want it to convey, the setting, and how it would be structured. I'm trying not to just shamelessly copy stories that inspire me, but this year I finally got around to watching Evangelion and playing Metal Gear Solid 1 and 2 and OMORI, while also growing my class consciousness and thinking more about the emotional and spiritual damage that comes with otherness, alienation, and lack of unity. All these themes are gelling into something that is starting to make sense in the little world I'm creating here.

Something interesting that I want to try to explore is how AI is trained on a wealth of human input, but cannot personally connect to that broader human experience in the way that people are supposed to when they are also trained on human input. It is trained and 'raised' like us but it can never be us. It's very weird, and it's obvious that most AI companies are led by people who can't tell that it's very weird. I'll keep working on this idea and see where it goes. I kind of worry that this 'AI is like humans but not treated like humans' thing will go too far in the way that it did (IMO) in Detroit: Become Human, which just directly re-enacted multiple types of historical oppression like segregated buses and border patrol, but replaced the real-life victims of these systems with AI androids. Some parts of that game are interesting though.

What's next?

That's pretty much all I'm comfortable sharing now. Work has been really eating into my time, but I'm still focused on getting out a vertical slice that represents a compelling version of the game in Unreal. I am having issues fully implementing the level shifting mechanic in the same way I did in Unity, and am looking for help with it. If I can't get that done soon enough, I'll just work on a playable level that utilizes the gameplay mechanics that I already have working, like hands interacting with physics objects and floating around in zero-gravity.

I need to find an efficient way to model everything other than maps, since Trenchbroom kind of only works for mapping and not for anything more detailed like props and characters. I've been using Blockbench in another project and it seems like the way forward, especially since I'm committing to a relatively low-poly, low-res aesthetic. It has built-in, straightforward texturing and UV tools, which is really important for saving time.

Going back to the Cerny talk about "Method", it made me look back at the meandering path I've taken to exploring this game and appreciate more and more the fact that I'm not jumping into "make the full game" mode too soon. Keeping this a solo endeavor allows me to maintain the freedom needed to experiment and iterate, until the idea is ready to grow into something more cohesive. I value the lack of external pressure I've had in this process; doing this type of exploration in a "real" game dev team would require asking for money that few investors would want to give, and it would impose a pressure to move out of this non-linear stage ASAP into something more quantifiable. It's not perfect of course. Choosing this path means that I have to do unrelated work full-time like a normal person and barely have an hour or two per day to focus on the project. But it gives me a lot of time to think, and I'm getting more world experience that I can pull into this, and I believe it will result in a more meaningful game in the future. That said, if I get the chance to start working on the game full-time, I will gladly jump into "make the full game" mode sooner. I acknowledge that the final game will definitely not include everything I want in it, and may not even be a 'good game', and while I'll try my best, I'm mostly okay with that.

Thank you for reading this. The game is still in very, very early days and progress has been very slow and wandering over the past five years. This project is kind of like 20% of my life that I haven't shared with anyone (except Mark), and this post is a record of that 20% to finally share with others as well as to collect everything for myself; I had forgotten a lot of this stuff until I went back into my photos, Discord history, and old notes. Hopefully I make enough progress to write another one of these sometime soon.

In the meantime, here is my latest prototype:

And here is some recent concept art: